AR Session Tracking and its implications for developers



Seamlessly interweaving the real world and the virtual content is crucial to providing an immersive AR experience. To achieve this, we rely on meticulously engineered toolkits that let us track the correspondence between virtual and real entities, including the user’s device itself.

How can this be accomplished?

This concept of estimating a device’s position relative to the environment is called positional tracking. This method comes in two flavors:

* **Inside-out** - using sensors placed inside the device that is being tracked

* **Outside-in** - using sensors placed in stationary locations in the environment

What I want to focus on today is the former, inside-out tracking, and some of its potential issues that need to be taken into account when developing an application.

How does it work in practice?

To better understand some of the mechanisms at play, let’s have a quick look at how modern toolkits, such as Google’s ARCore or Apple’s ARKit, determine the device’s position and orientation relative to the environment.

The foundation of this process is called “simultaneous localization and mapping”, a.k.a. SLAM. In a nutshell, SLAM builds a map of reference points (generally called “feature points” in AR jargon) and returns the device’s position relative to these points. The feature points are gathered by the camera throughout the whole session and are used to continuously adjust the correspondence between the real and the virtual world.
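To make the localization half of SLAM a little more concrete, here is a deliberately simplified C# sketch. It assumes the map of feature points is already known and that the device’s orientation is known too (so each observation is the point’s offset from the device, already expressed in world axes), and it estimates only the translation by averaging `mapPoint - observation` over all matches, which is the least-squares answer for that restricted problem. Real toolkits such as ARCore and ARKit solve a much harder joint problem internally and expose nothing like this; `ToyLocalizer` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

// Toy illustration only: translation-only localization against a known map.
// Assumes each observation is the feature point's offset from the device,
// already rotated into world axes (i.e. the orientation is known).
public static class ToyLocalizer
{
    public static Vector3 EstimateDevicePosition(
        IReadOnlyList<Vector3> mapPoints,    // feature point positions in the map
        IReadOnlyList<Vector3> observations) // the same points as seen from the device
    {
        if (mapPoints.Count != observations.Count || mapPoints.Count == 0)
            throw new ArgumentException("Need the same, non-zero number of matches.");

        // Each match votes for devicePosition = mapPoint - observation;
        // averaging the votes is the least-squares estimate for this toy problem.
        Vector3 sum = Vector3.Zero;
        for (int i = 0; i < mapPoints.Count; i++)
            sum += mapPoints[i] - observations[i];

        return sum / mapPoints.Count;
    }
}
```

In this toy, more matched points means errors in individual observations average out, which mirrors the intuition that feature-rich surroundings track better.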

What’s more, the majority of devices used to run AR applications are equipped with an IMU (inertial measurement unit, i.e. an accelerometer and a gyroscope) whose readings help derive the device’s orientation in the real world. This in turn lets us render the virtual entities from the correct perspective as the user moves around the environment.

That’s the key concept behind augmented reality applications: they first need to gather information about their surroundings and build an understanding of them before they can reliably provide effective results.

All of this improves the overall experience and further enhances the illusion that the virtual content presented to the user is part of the real world.

What could possibly go wrong?

All of this sounds pretty peachy, and rightfully so - in addition to these mechanisms being very powerful on their own, they introduce a plethora of possibilities such as plane tracking or creating anchors in the environment that are shared between multiple devices.

What needs to be pointed out, though, is that all these computations involve a fair amount of uncertainty and the end result is imperfect. This doesn’t pose a problem for most AR applications, as what SLAM has to offer is usually good enough. The real challenge is getting the user to behave in a way that lets the session initialize properly, and handling all the cases when they don’t.

This whole system operates on the assumption that the camera’s view is relatively clear and that the device’s surroundings are well lit and contain some distinctive features, like sharp edges. When this is not the case, we can expect errors and inconsistencies in the app’s behavior. We cannot be sure that the user won’t make sudden motions that blur the camera image, or that their environment isn’t featureless, so we need to plan ahead and make sure our logic handles these situations thoroughly and in a user-friendly way.

Before diving in to tackle this problem, let’s establish a common layer of abstraction over all the internals. One of the most commonly used frameworks for writing augmented reality software is Unity’s ARFoundation. It wraps the aforementioned ARCore and ARKit into one coherent toolkit that lets us build multi-platform AR applications. We’ll base the rest of our discussion on its API.

A quick glance at ARFoundation

In ARFoundation the AR session is governed by the ARSession component (there should only be one of them in the scene, otherwise they conflict with each other). It’s responsible for controlling the whole lifecycle and configuration of the session. Two of its static properties are especially noteworthy in the context of this article: state and notTrackingReason.

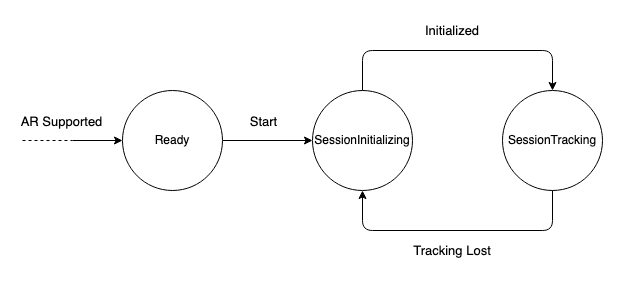

The ARSessionState enum describes the state of the whole AR system. We are particularly interested in two of its values (the rest can be found in the documentation):

* **SessionInitializing** - an AR session is booting up. The process of gaining initial information about the environment is performed while in this state.

* **SessionTracking** - an AR session was successfully initialized and is currently tracking.

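The ARSession alternates between initialization and tracking, which can be pictured as a very simple two-state machine: once the session has gathered enough information it moves from SessionInitializing to SessionTracking, and when tracking is lost it falls back to SessionInitializing until it recovers.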

The session has to be tracking in order for the whole AR ecosystem to work. It becomes apparent that in order to make the experience as consistent as possible we should aim to keep the session in this state at all times.
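That two-state cycle can be sketched in plain C# so it compiles outside Unity. `SessionPhase` and `SessionPhaseMachine` are hypothetical: the enum is a stand-in for the two `ARSessionState` values above, and in a Unity project you would translate the `state` carried by ARFoundation’s `ARSession.stateChanged` event into calls to `OnStateChanged`.

```csharp
using System;

// Hypothetical stand-in for the two ARSessionState values discussed above.
public enum SessionPhase { SessionInitializing, SessionTracking }

// Follows the initializing <-> tracking cycle and raises an event on each transition.
public sealed class SessionPhaseMachine
{
    public SessionPhase Phase { get; private set; } = SessionPhase.SessionInitializing;
    public int TrackingLostCount { get; private set; }

    public event Action TrackingGained;
    public event Action TrackingLost;

    // In Unity this would be called from an ARSession.stateChanged handler.
    public void OnStateChanged(SessionPhase next)
    {
        if (next == Phase)
            return; // repeated notification, no transition

        Phase = next;
        if (next == SessionPhase.SessionTracking)
        {
            TrackingGained?.Invoke();
        }
        else
        {
            TrackingLostCount++;
            TrackingLost?.Invoke();
        }
    }
}
```

Reacting to transitions rather than polling the state every frame keeps the "tracking lost" and "tracking regained" logic in one place.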

Sadly, this is easier said than done. There are many potential reasons why the session could lose tracking. In ARFoundation they are represented by the NotTrackingReason enum. We’ll explore the ones related to poor conditions that arise after the session has already been successfully initialized:

* **ExcessiveMotion** - the device is moving too fast, resulting in a blurry image that prevents finding feature points.

* **InsufficientLight** - the current environment is too poorly lit. An example scenario would be turning off the lights in the room.

* **InsufficientFeatures** - there are too few detected feature points. An example scenario would be pointing the device at a blank wall.

We have to account for all of these, and believe me, they will happen. Even when the environment is relatively well suited for tracking, the way the user acts still has a huge impact on whether or not the session is tracking.

Let’s explore this notion in the context of XO, an ARFoundation-based multiplayer game that we have been working on for some time now at Upside. It relies on processing input from multiple users simultaneously and synchronizing common reference points between them.

Introducing XO

The idea is simple: two players in the same room place blocks around a common starting point, and the first person to get 5 in a row wins. You can think of it as an augmented reality take on regular paper-and-pen tic-tac-toe, with several twists that make it more appealing as an AR game, such as replacing the turn-based model with a cooldown between block placements.
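The cooldown that replaces turns can be expressed as a per-player timestamp check. This is a hypothetical C# sketch, not XO’s actual implementation: the `PlacementCooldown` name and the 2-second default are placeholders, and the current time is passed in explicitly so the logic stays testable (in Unity you would typically pass `Time.time`).

```csharp
using System.Collections.Generic;

// Hypothetical per-player cooldown between block placements.
public sealed class PlacementCooldown
{
    private readonly float cooldownSeconds;
    private readonly Dictionary<string, float> lastPlacement = new Dictionary<string, float>();

    public PlacementCooldown(float cooldownSeconds = 2f) => this.cooldownSeconds = cooldownSeconds;

    // Returns true and records the placement if the player's cooldown has elapsed.
    public bool TryPlace(string playerId, float now)
    {
        if (lastPlacement.TryGetValue(playerId, out float last) && now - last < cooldownSeconds)
            return false; // this player is still cooling down

        lastPlacement[playerId] = now;
        return true;
    }
}
```

Because each player has an independent timer, both can act at the same time, which is what makes the game feel real-time rather than turn-based.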

The interesting part here is the “common starting point”. It implies that both devices taking part in a game share an understanding of the environment they are in. In practice, this means that both players took the time to move around their environment and provide their devices with enough information to accurately resolve not only positions relative to themselves, but also those created by the other player’s device.

On top of that, we still have to handle the session losing tracking mid-game. What would happen if someone loses tracking while trying to position such a “common point”? Furthermore, how can we guarantee that both players are even in the same room, and how should the app behave when they are not?

In practice, if not properly handled, these circumstances lead to either an unpleasant gaming experience or an inability to play at all. That’s why it’s crucial to come up with a strategy that not only prevents these issues from breaking the application, but also guides the user to properly initialize the session and tells them how to recover from tracking problems.

A quick introduction to Cloud Anchors

Before we continue, let’s have a brief rundown of how the concept of sharing points between players works. These common points were christened Cloud Anchors by Google.

Regular anchors are used to bind an entity to a trackable (a plane for example). They ensure that our virtual objects stay in one place after we have created them in the context of an anchor.

Cloud Anchors are basically anchors that are hosted in the cloud. The hosting player sends a “regular” anchor, together with the data their device has gathered about the surrounding environment, to the ARCore Cloud Anchor service. The service builds a map from this data, positions the anchor in it and returns a unique ID to the host. Other players can then resolve the anchor by querying the service with this ID.
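The essential data flow, where only the ID travels between players, can be modeled with a toy in-memory service. `FakeCloudAnchorService` is entirely hypothetical and is not the ARCore Cloud Anchor API: the real service matches the resolving device’s view against its map so the anchor ends up in that device’s own coordinate frame, a step this sketch skips by simply handing back the stored position.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

// Hypothetical stand-in for the ARCore Cloud Anchor service: it stores the
// hosted pose under a fresh ID and hands it back to whoever asks for that ID.
public sealed class FakeCloudAnchorService
{
    private readonly Dictionary<string, Vector3> anchors = new Dictionary<string, Vector3>();

    // Host: the hosting player uploads an anchor and receives a unique ID.
    public string Host(Vector3 anchorPosition)
    {
        string id = Guid.NewGuid().ToString("N");
        anchors[id] = anchorPosition;
        return id;
    }

    // Resolve: other players look the anchor up by its ID.
    public bool TryResolve(string id, out Vector3 anchorPosition) =>
        anchors.TryGetValue(id, out anchorPosition);
}
```

Usage mirrors the game: the host calls `Host`, sends the returned string to the guest over the game’s own networking, and the guest calls `TryResolve` with it.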

Now we have gathered all the technical knowledge necessary to understand and combat the issues we are facing. Let’s dive right in.

Instructive tracking status information

The first question we need to ask ourselves is: “How do we inform the player about tracking interruptions?” This question is important because its answer shapes the whole process of solving tracking-related problems; the more specific fixes will have to be built on top of it.

The obvious remedy is to display a notice that tells the player which steps they need to take for the application to work properly again. We can use the information provided by the API to make this notice simple, yet informative. Extracting it from ARFoundation is as easy as reading the session’s static notTrackingReason property:

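```csharp
using UnityEngine;
using UnityEngine.XR.ARFoundation;   // ARSession
using UnityEngine.XR.ARSubsystems;   // NotTrackingReason

public class TrackingStatusNotice : MonoBehaviour // class name is illustrative
{
    void Update()
    {
        // notTrackingReason is static, so no reference to the ARSession component is needed
        switch (ARSession.notTrackingReason)
        {
            case NotTrackingReason.ExcessiveMotion:
                // logic
                break;
            case NotTrackingReason.InsufficientLight:
                // logic
                break;
            case NotTrackingReason.InsufficientFeatures:
                // logic
                break;
            default:
                // remaining reasons (None, Initializing, Relocalizing, ...)
                break;
        }
    }
}
```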

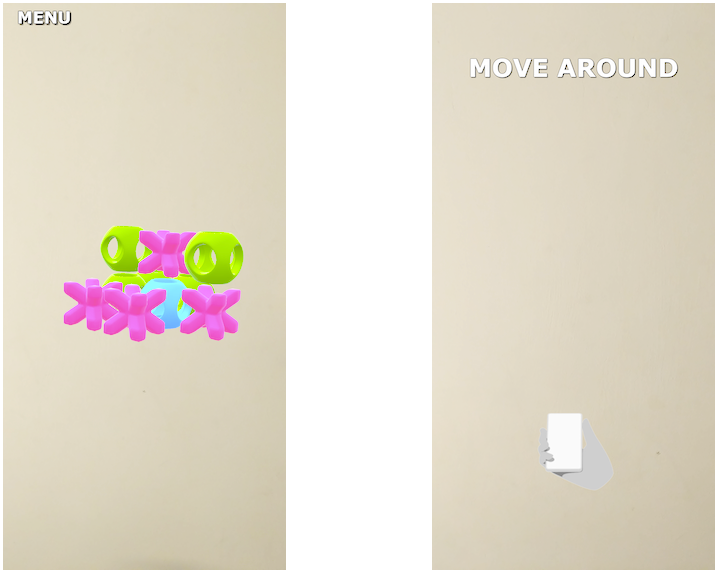

Using this, we can tailor the messages we show the player to the actual reason why the session is not tracking. In XO we also added some visual flair in the form of an animated hand doing a scanning gesture, suggesting to the player that they need to move around a little.

Also, a quick side note: the key here is to strike a balance between keeping the user informed and not bombarding them with technical gibberish that, in the end, means nothing to them. It’s best to keep these messages as generic as possible. I’d also suggest focusing more on how to solve the problem than on what actually caused it; for example, try replacing “It’s too dark!” with something along the lines of “Find a place with more light”.
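Following that advice, each reason can map to a short, solution-oriented message. In this sketch `TrackingIssue` is a hypothetical local enum mirroring a subset of ARFoundation’s `NotTrackingReason` so the code compiles outside Unity; in a project you would switch on `ARSession.notTrackingReason` directly. The message wording is illustrative only.

```csharp
// Hypothetical local mirror of a subset of ARFoundation's NotTrackingReason.
public enum TrackingIssue { None, ExcessiveMotion, InsufficientLight, InsufficientFeatures, Other }

public static class TrackingMessages
{
    // Each message says what to do next rather than what went wrong.
    public static string For(TrackingIssue issue)
    {
        switch (issue)
        {
            case TrackingIssue.ExcessiveMotion:
                return "Move your device more slowly";
            case TrackingIssue.InsufficientLight:
                return "Find a place with more light";
            case TrackingIssue.InsufficientFeatures:
                return "Point your device at a more detailed surface";
            case TrackingIssue.None:
                return string.Empty; // tracking works, nothing to show
            default:
                return "Slowly move your device around"; // generic fallback
        }
    }
}
```

Keeping the wording in one function makes it easy to adjust the tone later without touching the tracking logic.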

Hiding trackables

Another thing worth mentioning is that every virtual object currently positioned in the environment is fully dependent on the ARSession. This means the user is in for an unpleasant show if they lose tracking in the middle of a round: the objects start drifting from their anchored positions and then appear to be “stuck” to the camera, moving along with its view.

This was especially true in our case, since we relied heavily on raycasting and user input. The most elegant solution we came up with was actually tied to the previous section: hiding everything apart from the “tracking lost” notice instead of displaying the notice on top of what’s happening in the environment.

Sounds pretty brutal, eh? Well, turns out that in this case it works beautifully and actually fixes some other issues that otherwise would have been handled separately.
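Reduced to plain C#, the idea is one boolean driving two mutually exclusive views. `IView`, `SimpleView` and `TrackingAwareScreen` are hypothetical names; in Unity the views would most likely be GameObjects toggled with `SetActive`, and `OnTrackingChanged` would be driven by the session state (for example `ARSession.state == ARSessionState.SessionTracking`).

```csharp
// Hypothetical minimal view abstraction (in Unity: a GameObject and SetActive).
public interface IView
{
    bool Visible { get; set; }
}

public sealed class SimpleView : IView
{
    public bool Visible { get; set; }
}

// Shows either the game content or the "tracking lost" notice, never both.
public sealed class TrackingAwareScreen
{
    private readonly IView gameContent;
    private readonly IView trackingLostNotice;

    public TrackingAwareScreen(IView gameContent, IView trackingLostNotice)
    {
        this.gameContent = gameContent;
        this.trackingLostNotice = trackingLostNotice;
        OnTrackingChanged(false); // start with the notice until the session tracks
    }

    public void OnTrackingChanged(bool isTracking)
    {
        gameContent.Visible = isTracking;         // hides drifting anchored objects
        trackingLostNotice.Visible = !isTracking; // the only thing shown while not tracking
    }
}
```

Since the gameplay view is inactive whenever the session is not tracking, input handlers living on it cannot fire in that state either.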

Anchors and tracking

As one would expect, the whole anchor functionality is tightly coupled with the session’s tracking mechanism. This is true not only in the sense that an accurate anchor requires a good map of the environment, but also in that the session strictly has to be tracking at the moment the anchor is created.

If it isn’t, we can run into a straight-up NullReferenceException when trying to create a Cloud Anchor:

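```csharp
// AddReferencePoint can return null when the session is not tracking...
ARReferencePoint anchor = referencePointManager.AddReferencePoint(pose);

// ...and trying to host that null anchor ends in a NullReferenceException
ARCloudReferencePoint cloudAnchor = referencePointManager.AddCloudReferencePoint(anchor);
```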

That’s why in XO it made sense to flip the switch when tracking was lost - not only was it more user friendly but it also happens to handle all the mechanisms that require the session to be tracking. It’s also very easy to implement by swapping the main game view with the aforementioned notice when tracking is lost and vice versa when it’s regained.
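Even with the view swap in place, a cheap defensive check around anchor creation does no harm. The following is a hypothetical C# sketch: `AnchorGuard` is an illustrative name, and `createAnchor` stands in for the real call (such as `AddReferencePoint` in the snippet above), which may return null on failure.

```csharp
using System;

// Hypothetical guard around anchor creation. createAnchor stands in for the
// real ARFoundation call and, like it, may return null on failure.
public static class AnchorGuard
{
    public static bool TryCreate<TAnchor>(
        bool isTracking,
        Func<TAnchor> createAnchor,
        out TAnchor anchor) where TAnchor : class
    {
        anchor = null;
        if (!isTracking)
            return false; // don't even try: the session must be tracking

        anchor = createAnchor();
        return anchor != null; // creation can still fail, so check the result
    }
}
```

Only when `TryCreate` returns true would the anchor be passed on for hosting, so a null never reaches the Cloud Anchor call.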

Having a fallback in case of anchor errors

In XO one of the players chooses a point in the environment and hosts it as a Cloud Anchor, which is later resolved by the other player. This means that the only information that flows between the players is the Cloud Anchor’s ID, given to the host by the service. This is where another minor issue might become apparent - what happens if hosting or resolving of an anchor fails with an error?

There are many reasons why this can happen; most of them are either tracking related or internal problems within the API. It’s also worth mentioning that since ARCore SDK v1.12, resolving an anchor no longer fails when the ARCore Cloud Anchor service is unreachable or the anchor cannot be resolved immediately. Instead, the API keeps retrying until the anchor is detached, so keep this in mind when using this mechanism.
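Because a resolve attempt may keep retrying instead of failing, it helps to impose your own deadline so a fallback has a chance to kick in. This sketch uses plain `Task.WhenAny` with `Task.Delay`; `ResolveTimeout`, the `resolveAttempt` task and the timeout value are hypothetical, and in Unity you might instead poll the anchor’s state each frame from a coroutine. After giving up, you would still need to release the pending anchor (for example by destroying it) so the API stops retrying.

```csharp
using System;
using System.Threading.Tasks;

public static class ResolveTimeout
{
    // Returns the resolved result, or null if it did not arrive within the timeout.
    // The resolve attempt itself is not cancelled here; the caller should release
    // the pending anchor so the underlying API stops retrying.
    public static async Task<T> ResolveWithTimeoutAsync<T>(Task<T> resolveAttempt, TimeSpan timeout)
        where T : class
    {
        Task finished = await Task.WhenAny(resolveAttempt, Task.Delay(timeout));
        return finished == resolveAttempt ? await resolveAttempt : null;
    }
}
```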

Unsuccessful attempts to host or resolve an anchor can be handled easily by defining a default position relative to the tracking device, plus a special tag that informs the guest player that hosting the anchor failed. In XO’s case, the default position is simply a point in front of where the device is currently facing. Both players then revert to this hardcoded position and continue the game as usual. This way we can guarantee that even after an error, the players can still comfortably play the game.
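The fallback position itself is a single line of vector math. Here it is sketched with `System.Numerics`; in Unity the equivalent would be `camera.transform.position + camera.transform.forward * distance`. The `FallbackAnchor` name, the 1.5 m default distance and the `"__no_anchor__"` tag value are hypothetical placeholders, not XO’s actual values.

```csharp
using System.Numerics;

public static class FallbackAnchor
{
    // Hypothetical tag sent instead of a Cloud Anchor ID when hosting fails.
    public const string HostFailedTag = "__no_anchor__";

    // A point `distance` metres in front of the device along its viewing direction.
    public static Vector3 InFrontOf(Vector3 devicePosition, Vector3 deviceForward, float distance = 1.5f)
    {
        return devicePosition + Vector3.Normalize(deviceForward) * distance;
    }

    // The guest falls back when it receives the failure tag or no ID at all.
    public static bool ShouldUseFallback(string receivedAnchorId) =>
        string.IsNullOrEmpty(receivedAnchorId) || receivedAnchorId == HostFailedTag;
}
```

Each player computes the fallback relative to their own device, so the two boards will not line up exactly, but the game stays playable.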

Is this foolproof?

Short answer: no.

What I wanted to do in this article was to sketch a versatile approach to handling tracking-related issues. The general concepts I presented apply to most augmented reality applications you’ll come across. Nevertheless, keep in mind that each app has its own quirks and characteristics that require developers to come up with unique approaches to handle them.

After all, the cornerstone of any augmented reality application is the ability to keep track of both the real world, captured by the lens of a camera for example, and the virtual world that exists only in the context of a given application.
